Immobiliare.it is one of Italy's leading online real estate platforms.

With a vast array of listings that include residential, commercial, and vacation properties, the website serves as a comprehensive resource for anyone looking to invest in Italian real estate.

For American realtors and property buyers, Immobiliare.it offers a unique opportunity to access a diverse market that can otherwise be challenging to navigate from overseas.

Importance of Real Estate Data

In the real estate industry, data is king. Accurate and timely information can make the difference between a successful investment and a costly mistake.

For American realtors and property buyers, understanding the Italian market requires a reliable source of data.

Immobiliare.it provides this in the form of property listings, price trends, neighborhood insights, and more.

The platform's robust search filters allow users to narrow down their options based on various criteria, such as location, property type, and price range, making it easier to find properties that meet specific investment goals.

Use-Cases for Real Estate Data from Immobiliare.it

- Market Research. American realtors can use the data to understand the Italian real estate market better, identifying trends and opportunities that could benefit their clients.

- Investment Decisions. Property buyers from the United States can use Immobiliare.it to make informed investment decisions. The platform's data can help identify undervalued properties, up-and-coming neighborhoods, or lucrative vacation rentals.

- Comparative Analysis. The data can be used to compare the Italian market with the American market, which is particularly useful for investors looking to diversify their portfolios internationally.

- Client Services. Realtors can offer additional services to American clients interested in the Italian market, such as customized reports and analyses based on data from Immobiliare.it.

- Marketing Strategy. By scraping data from Immobiliare.it, businesses can identify current trends in property features and locations and use them to shape a more effective marketing strategy. This approach can help real estate websites stay competitive.

- Pricing Analysis. Scraping current prices and price history from Immobiliare.it supports a thorough pricing analysis. Understanding how prices fluctuate, what newly listed properties are asking, and where current market prices sit helps businesses and consumers make more informed decisions.

- Legal and Regulatory Insights. Immobiliare.it often features articles and resources that explain Italy's real estate laws and regulations, which can be invaluable for American investors unfamiliar with the Italian legal landscape.

By leveraging the data and resources available on Immobiliare.it, American realtors and property buyers can gain a competitive edge in a market that is both challenging and rewarding.

Legal Considerations

When it comes to gathering data from online platforms, it's crucial to understand the legal and ethical landscape to ensure you're operating within the bounds of the law and respecting the rights of the data owners.

Is it Legal to Scrape Immobiliare.it?

Before scraping any website, it's crucial to consult its robots.txt file, which outlines the rules for web crawlers.

Immobiliare.it's robots.txt file includes several "Disallow" directives, indicating the areas of the website that should not be scraped.

For example, it disallows crawling of search maps, user areas, and certain types of listings, among other sections.

Ignoring these rules can get your IP address blocked and, in some cases, expose you to legal action.

Therefore, it's essential to adhere to the guidelines set forth in the robots.txt file when considering any data gathering activities on Immobiliare.it.
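A minimal sketch of checking robots.txt rules before fetching a URL, using Python's standard-library urllib.robotparser. The rules below are a made-up example, not Immobiliare.it's actual robots.txt, and the helper names and user-agent string are hypothetical; in practice you would load the live file with read() as shown in the trailing comment.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration only; it is NOT Immobiliare.it's real file.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /search-map/
Disallow: /user-area/
"""

USER_AGENT = "my-research-bot"  # hypothetical user-agent string


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, url: str, user_agent: str = USER_AGENT) -> bool:
    """Return True if the parsed rules permit user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


# To check the live file instead (requires network access):
#   live = RobotFileParser("https://www.immobiliare.it/robots.txt")
#   live.read()
#   live.can_fetch(USER_AGENT, "https://www.immobiliare.it/")
```

Running the check before every request, rather than once at startup, keeps the scraper honest if you later add new URL patterns.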

Ethical Considerations

Beyond the legal aspects, ethical considerations also come into play when scraping data from websites.

Ethical web scraping respects both the letter and the spirit of the law, as well as the rules in the website's robots.txt file.

Additionally, ethical scraping involves not overloading the website's servers, respecting user privacy, and using the data responsibly.

Failure to adhere to these ethical guidelines can harm your reputation and could also have legal repercussions.

Insights on Web Scraping Legality

Here are summaries of three articles that discuss the legality of web scraping:

The first article reports that the U.S. Court of Appeals for the Ninth Circuit ruled that scraping publicly accessible data does not violate the Computer Fraud and Abuse Act (CFAA). The ruling is significant for archivists, academics, researchers, and journalists who use web scraping tools.

The article also notes that some scraping cases have raised privacy and security concerns. The case in question, hiQ Labs v. LinkedIn, arose when LinkedIn tried to stop hiQ Labs, a company that scraped public LinkedIn profiles. The Ninth Circuit ruled in hiQ's favor on the CFAA question in 2019 and reaffirmed that decision in 2022.

The second article states that no U.S. federal law prohibits web scraping as long as the scraped data is publicly available.

However, it stresses the importance of ethics and advises scrapers to stay within websites' terms of service and robots.txt files.

The third article explains that under most privacy laws, collecting, using, or storing personally identifiable information (PII) without the individual's explicit consent is illegal.

It also mentions that web scraping can sometimes fall into legal grey areas, especially when it involves collecting sensitive information.

Tools and Technologies Used for Data Gathering

In the realm of web scraping, a variety of tools and technologies are available to suit different needs. Whether you're a beginner or an experienced data scientist, there's likely a tool that fits your project requirements. Below, we explore some of the most commonly used tools.

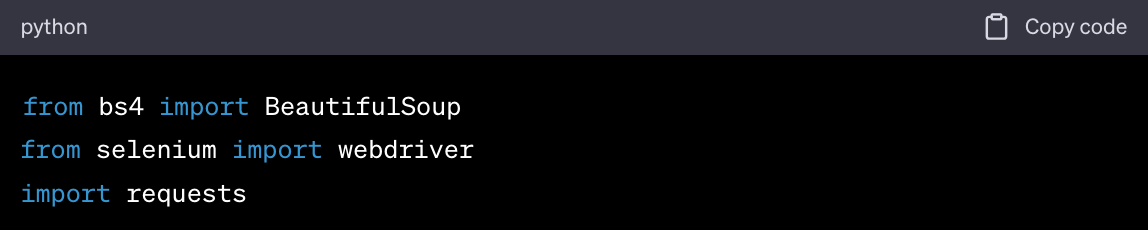

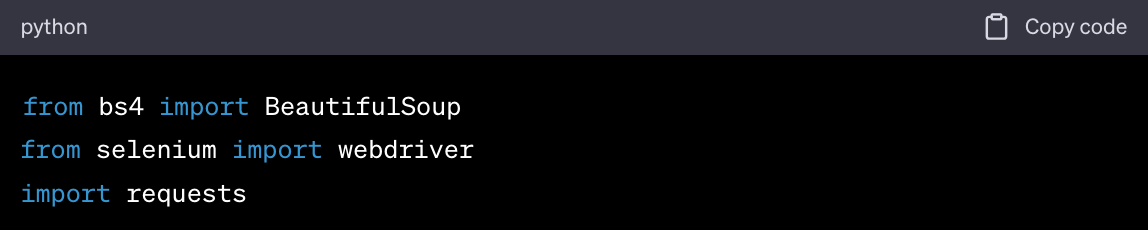

Python Libraries (BeautifulSoup, Selenium)

Python is a popular language for web scraping, and it offers several libraries to make the process easier:

- BeautifulSoup: A Python library for pulling data out of HTML and XML files. It provides Pythonic idioms for iterating over, searching, and modifying the parse tree. BeautifulSoup is widely used to extract data from web pages and is relatively easy for beginners to learn.

- Selenium: Unlike BeautifulSoup, which only parses the HTML you give it, Selenium drives a real web browser and interacts with the page much as a human user would. It is often used to scrape dynamic websites whose content is loaded via JavaScript, and it is also used for automated testing.

The two libraries are often used together: Selenium loads and renders the page, and BeautifulSoup parses the resulting HTML.
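A minimal BeautifulSoup sketch of the parsing side. The HTML snippet and the class names (listing, title, price) are invented for illustration; Immobiliare.it's real markup is different and changes over time, so inspect the live page with your browser's developer tools and adjust the selectors. parse_listings is a hypothetical helper name.

```python
from bs4 import BeautifulSoup

# Invented markup standing in for a search-results page; NOT Immobiliare.it's real HTML.
SAMPLE_HTML = """
<ul>
  <li class="listing">
    <a class="title" href="/example-listing-1/">Trilocale, Roma</a>
    <span class="price">€ 300.000</span>
  </li>
  <li class="listing">
    <a class="title" href="/example-listing-2/">Bilocale, Milano</a>
    <span class="price">€ 250.000</span>
  </li>
</ul>
"""


def parse_listings(html: str) -> list:
    """Extract title, URL, and price text from each listing card."""
    soup = BeautifulSoup(html, "html.parser")
    listings = []
    for card in soup.select("li.listing"):  # one element per listing card
        title = card.select_one("a.title")
        price = card.select_one("span.price")
        listings.append({
            "title": title.get_text(strip=True) if title else None,
            "url": title["href"] if title and title.has_attr("href") else None,
            "price": price.get_text(strip=True) if price else None,
        })
    return listings
```

Returning None for a missing field, instead of raising, keeps one malformed card from aborting the whole page; the gaps can be caught later during validation.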

Web Scraping Services

Web scraping services provide a convenient alternative for those who prefer not to delve into coding.

These services often come with intuitive user interfaces that allow you to specify what data you want to scrape, without the need to write any code.

They handle everything from data extraction to storage, making it easier for you to focus on analyzing and utilizing the data.

GitHub Repositories for Reference

GitHub is a treasure trove of resources for web scraping. You can find numerous repositories that offer pre-written scraping scripts, frameworks, and tutorials.

Here are some types of repositories you might find useful:

- Tutorial Repos. These repositories provide step-by-step guides and code snippets to help you get started with web scraping.

- Framework Repos. These contain pre-built frameworks designed to scrape specific types of websites or data.

- Project Repos. These are full-fledged projects that demonstrate more complex scraping tasks, often involving multiple websites or technologies.

By studying these repositories, you can gain insights into best practices and advanced techniques for web scraping.

Step-by-Step Guide

This section provides a comprehensive guide on how to gather data from Immobiliare.it using Python libraries and other tools.

Follow these steps to set up your environment, write the code, and find GitHub examples for further learning.

Setting Up the Environment

-

Install Python. If you haven't already, download and install Python from the official website.

-

Install Libraries. Open your terminal and install BeautifulSoup and Selenium by running the following commands:

-

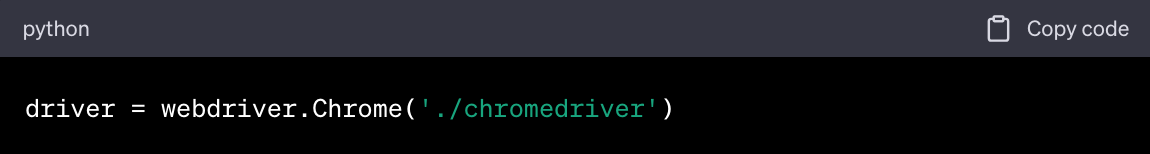

Web Driver. Download the web driver compatible with your browser (e.g., ChromeDriver for Chrome) and place it in your project directory.

-

API Key for Geonode. If you're using Geonode's Pay-As-You-Go Scraper, sign up for an API key from their website.

Writing the Code to Gather Data from Immobiliare.it

-

Import Libraries. Import the necessary Python libraries at the beginning of your script.

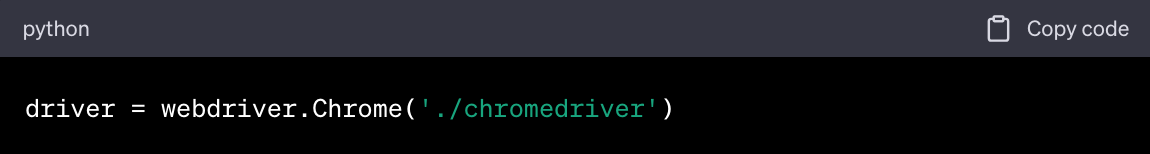

-

Initialize WebDriver. Initialize the Selenium WebDriver.

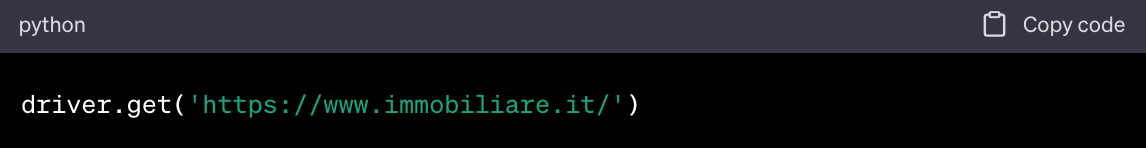

-

Navigate to Immobiliare.it. Use the WebDriver to navigate to the Immobiliare.it webpage you want to scrape.

-

Locate Elements. Use BeautifulSoup or Selenium functions to locate the HTML elements containing the data you want to scrape.

-

Scrape Data. Extract the data and store it in a variable or file.

-

Geonode API. If using Geonode, make an API call to handle proxies, browsers, and captchas.

-

Close WebDriver. Don't forget to close the WebDriver once you're done.
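The steps above can be sketched as follows, assuming Selenium 4 and Chrome. The function names make_headless_chrome and scrape_page are hypothetical, and the parse callback stands in for whatever BeautifulSoup or Selenium extraction you write for the elements you located. The Geonode step is left out because its API is not described here.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def make_headless_chrome():
    """Step 2: initialize a Chrome WebDriver in headless mode (Selenium 4 API)."""
    # Imported here so scrape_page() can be reused or tested without Selenium installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")  # no visible browser window
    # With Selenium 4.6+, Selenium Manager locates or downloads a matching ChromeDriver.
    return webdriver.Chrome(options=options)


def scrape_page(driver, url: str, parse: Callable[[str], T]) -> T:
    """Steps 3-7: open url, extract data from the rendered HTML, and close the browser."""
    try:
        driver.get(url)            # step 3: navigate to the page
        html = driver.page_source  # HTML as currently rendered by the browser
        return parse(html)         # steps 4-5: locate elements and extract the data
    finally:
        driver.quit()              # step 7: always close the WebDriver, even on errors


# Example usage (needs Chrome and network access; replace the URL with the
# results page you want to scrape and len with your own parser):
#   html_length = scrape_page(make_headless_chrome(), "https://www.immobiliare.it/", len)
```

Putting quit() in a finally block means a parsing error never leaves an orphaned browser process running in the background.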

GitHub Examples and Tutorials

- Search for Repos. Use GitHub's search functionality to find repositories related to web scraping with Python, BeautifulSoup, and Selenium.

- Study the Code. Look for examples that are specifically designed for real estate websites or Immobiliare.it.

- Follow Tutorials. Some GitHub repositories offer step-by-step tutorials that can help you understand the code better.

- Clone and Experiment. Don't hesitate to clone repositories and tweak the code to suit your specific needs.

Advanced Techniques

As you become more comfortable with basic web scraping techniques, you may find the need to tackle more complex challenges. This section outlines some advanced techniques that can help you navigate these more complicated scenarios.

Handling Dynamic Websites

Dynamic websites load content asynchronously, often using JavaScript. This can make scraping more challenging. Here are some advanced techniques for handling dynamic websites:

- Selenium WebDriver. As mentioned earlier, Selenium can interact with dynamic web pages, allowing you to scrape data that is loaded via JavaScript.

- Headless Browsing. Run Selenium with Chrome or Firefox in headless mode to scrape websites without opening a visible browser window. PhantomJS, once a popular headless browser, is no longer maintained and is not supported by current Selenium releases.

- AJAX Requests. Some websites load data via AJAX requests. You can inspect these requests in your browser's developer tools (Network tab) and replicate them with the Python requests library.

- Wait Commands. Use Selenium's explicit and implicit waits to make sure the elements you need have loaded before scraping.

Scraping Real Estate Agent Data

Scraping data related to real estate agents can be a bit more complex due to the need for accuracy and the ethical considerations involved. Here are some tips:

- Target Specific Sections. Use BeautifulSoup or XPath expressions to target the specific sections of a website where agent data is stored.

- Pagination. Many websites list agents across multiple pages. Make sure your code can navigate through pagination.

- Rate Limiting. Be respectful of the website's terms and conditions. Implement rate limiting in your code to avoid overloading their servers.

- Data Validation. Always validate the data you've scraped to ensure it's accurate and complete.
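Pagination and rate limiting can be combined in one loop. This is a minimal sketch: fetch_page is a hypothetical callback you would write to download and parse page N of a listing or agent directory (how the site numbers its pages is not covered here), and the two-second default delay is an arbitrary example, not a documented limit.

```python
import time
from typing import Callable, Iterator, List


def crawl_pages(
    fetch_page: Callable[[int], List[dict]],
    max_pages: int = 50,
    delay_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[dict]:
    """Yield records page by page, pausing delay_s seconds between page requests.

    fetch_page(n) should download and parse page n (1-based) and return its
    records; an empty list is treated as "no more pages". max_pages is a safety
    cap so a pagination bug cannot turn into an endless crawl.
    """
    for page in range(1, max_pages + 1):
        records = fetch_page(page)
        if not records:
            break
        yield from records
        sleep(delay_s)  # simple rate limiting between consecutive requests
```

Passing sleep as a parameter keeps the default behavior realistic while letting tests run instantly.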

StackOverflow Insights on Handling Changing Codes

Websites frequently update their HTML structure, which can break your scraping code. Here's how you can adapt:

- Regular Updates: Keep an eye on StackOverflow threads related to the website you're scraping. Users often share updates on changes to website structures.

- Error Handling: Implement robust error handling in your code to alert you when scraping fails, allowing you to update your code accordingly.

- Community Insights: StackOverflow is a great place to find workarounds or alternative methods for scraping when a website changes its code structure.
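A small sketch of the error-handling idea: wrap each selector lookup so that a selector which suddenly matches nothing produces a logged, explicit error instead of silently yielding empty data. LayoutChangedError, select_required, and the logger name are hypothetical; the logging call could be swapped for an email or chat alert.

```python
import logging

from bs4 import BeautifulSoup

log = logging.getLogger("immobiliare_scraper")  # hypothetical logger name


class LayoutChangedError(RuntimeError):
    """Raised when a selector that used to work matches nothing."""


def select_required(soup: BeautifulSoup, css_selector: str, page_url: str = ""):
    """Return elements matching css_selector, or log and raise if there are none.

    An empty result on a page that normally has data is a strong hint that the
    site's HTML structure changed and the selector needs updating.
    """
    matches = soup.select(css_selector)
    if not matches:
        where = page_url or "<unknown page>"
        log.error("Selector %r matched nothing on %s; the layout may have changed",
                  css_selector, where)
        raise LayoutChangedError(f"no elements match {css_selector!r} on {where}")
    return matches
```

In a scheduled job, catching LayoutChangedError at the top level is a natural place to send yourself a notification before fixing the selector.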

By mastering these advanced techniques, you'll be better prepared to tackle a wide range of web scraping challenges. Whether you're dealing with dynamic content, specialized data, or changing website structures, these methods will equip you with the skills you need for effective data gathering.

Data Analysis and Utilization

Once you've successfully gathered data, the next step is to analyze and utilize it effectively.

This section will guide you through the process of analyzing the scraped data, building your own pricing model, and creating as well as updating offer profiles.

How to Analyze the Scraped Data

- Data Cleaning. The first step in any data analysis process is to clean the data. Remove any duplicates, handle missing values, and convert data types if necessary.

- Data Visualization. Use libraries like Matplotlib or Seaborn to visualize the data. This can help you identify trends, outliers, or patterns.

- Statistical Analysis. Use statistical methods to summarize the data. Calculate means, medians, standard deviations, etc., to get a better understanding of the data distribution.

- Text Analysis. If you've scraped textual data, consider using Natural Language Processing (NLP) techniques to extract insights.

- Correlation Analysis. Identify variables that are strongly correlated with each other. This can be particularly useful in real estate for understanding how different factors affect property prices.
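A minimal pandas sketch of the cleaning and statistics steps. The column names (url, city, price, size) and the toy rows are invented to mirror what a listings scraper might produce; they are not real Immobiliare.it data. clean_listings and summarize are hypothetical helper names.

```python
import pandas as pd

# Made-up rows for illustration only; NOT real Immobiliare.it data.
TOY_LISTINGS = pd.DataFrame({
    "url": ["/a", "/a", "/b", "/c", "/d"],
    "city": ["Roma", "Roma", "Roma", "Milano", "Milano"],
    "price": ["€ 300.000", "€ 300.000", "€ 450.000", "€ 500.000", "Prezzo su richiesta"],
    "size": ["100 m²", "100 m²", "120 m²", "100 m²", "80 m²"],
})


def clean_listings(raw: pd.DataFrame) -> pd.DataFrame:
    """Deduplicate listings and convert scraped price/size strings into numbers."""
    df = raw.drop_duplicates(subset="url").copy()
    # "€ 300.000" -> 300000: keep digits only (Italian prices use "." as the
    # thousands separator). Text such as "Prezzo su richiesta" becomes NaN.
    df["price_eur"] = pd.to_numeric(
        df["price"].str.replace(r"\D", "", regex=True), errors="coerce"
    )
    # "100 m²" -> 100 (the superscript ² is not matched by \d).
    df["size_m2"] = pd.to_numeric(df["size"].str.extract(r"(\d+)")[0], errors="coerce")
    df["eur_per_m2"] = df["price_eur"] / df["size_m2"]
    # Handle missing values by dropping rows without a usable price or size.
    return df.dropna(subset=["price_eur", "size_m2"])


def summarize(clean: pd.DataFrame) -> pd.Series:
    """Median price per square metre for each city."""
    return clean.groupby("city")["eur_per_m2"].median()
```

On the toy rows, the duplicate and the price-on-request listing are dropped, leaving three listings, and the median comes out at 3,375 €/m² for Roma and 5,000 €/m² for Milano. For the correlation step, clean[["price_eur", "size_m2"]].corr() returns the pairwise correlation matrix.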

Common Challenges and Solutions

Web scraping is not without its challenges. From CAPTCHAs and rate limits to concerns about data quality and reliability, this section aims to prepare you for some of the hurdles you might face and offer solutions to overcome them.

CAPTCHAs

- Problem: Many websites employ CAPTCHAs to prevent automated scraping.

- Solution: You can use services like 2Captcha or Anti-Captcha to solve CAPTCHAs automatically. Alternatively, run Selenium with a visible browser window and solve the CAPTCHA by hand when it appears during scraping.

Rate Limits

- Problem: Websites often have rate limits to restrict the number of requests from a single IP address.

- Solution: Implement rate limiting in your code to respect the website's terms. You can also use proxy services to rotate IP addresses. Geonode's residential proxies are an excellent service for this purpose.
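The rate-limiting and proxy-rotation ideas can be sketched as two small helpers. RateLimiter enforces a minimum gap between requests; proxy_cycle rotates through a list of proxy URLs in the dictionary format accepted by the proxies argument of requests.get(). Both names are hypothetical, and the proxy URLs in the usage comment are placeholders, not real Geonode endpoints.

```python
import itertools
import time
from typing import Callable, Dict, Iterable, Iterator, Optional


class RateLimiter:
    """Block so that consecutive calls to wait() are at least min_interval_s apart."""

    def __init__(self, min_interval_s: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self.min_interval_s = min_interval_s
        self._clock = clock  # injectable so tests need not really sleep
        self._sleep = sleep
        self._last: Optional[float] = None

    def wait(self) -> None:
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval_s - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now += remaining  # now exactly min_interval_s after the previous request
        self._last = now


def proxy_cycle(proxy_urls: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Endlessly yield requests-style proxies dicts, rotating through proxy_urls."""
    for proxy in itertools.cycle(proxy_urls):
        yield {"http": proxy, "https": proxy}


# Example usage (placeholder proxy URLs; needs the requests package and network access):
#   import requests
#   limiter = RateLimiter(min_interval_s=3.0)
#   proxies = proxy_cycle(["http://user:pass@proxy1.example:8000",
#                          "http://user:pass@proxy2.example:8000"])
#   for url in urls_to_fetch:
#       limiter.wait()
#       resp = requests.get(url, proxies=next(proxies), timeout=30)
```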

Incomplete Data

- Problem: Sometimes the scraped data may be incomplete due to dynamic loading or other issues.

- Solution: Use Selenium so that JavaScript-loaded content is rendered before you scrape, and use its wait functions to pause the code until specific elements have loaded.

Inconsistent Data

- Problem: The structure of the website may change over time, leading to inconsistent data.

- Solution: Regularly update your scraping code to adapt to any changes in the website's structure. Implement robust error handling to alert you when the scraping fails.

Data Validation

- Problem: Scraped data may contain errors or inaccuracies.

- Solution: Always validate your data against multiple sources or use checksums to ensure data integrity.
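A minimal sketch of field validation plus a record checksum. REQUIRED_FIELDS, validate_listing, and record_checksum are hypothetical, and cross-checking against other sources is not shown. The checksum is a SHA-256 hash of a canonical JSON encoding, so you can store it next to each record and compare it between runs to spot listings that changed or were corrupted in storage.

```python
import hashlib
import json

REQUIRED_FIELDS = ("url", "title", "price_eur")  # hypothetical schema


def validate_listing(record: dict) -> list:
    """Return a list of problems found in one scraped listing (empty if it looks OK)."""
    problems = [f"missing {field}" for field in REQUIRED_FIELDS
                if record.get(field) in (None, "")]
    price = record.get("price_eur")
    if isinstance(price, (int, float)) and price <= 0:
        problems.append("non-positive price")
    return problems


def record_checksum(record: dict) -> str:
    """SHA-256 of a canonical JSON encoding, so key order does not affect the result."""
    canonical = json.dumps(record, sort_keys=True, ensure_ascii=False, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```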

Legal and Ethical Concerns

- Problem: Scraping data may sometimes be against the terms of service of the website.

- Solution: Always read and adhere to the website's terms of service and robots.txt file. Be respectful of the website's rules and policies.

By being aware of these common challenges and their solutions, you'll be better prepared to tackle any issues that come your way during your web scraping endeavors.

People Also Ask

How can I scrape real estate websites without coding?

You can use no-code web scraping tools like WebHarvy, Octoparse, or Import.io. These tools offer user-friendly interfaces for point-and-click data extraction.

What are the best Python libraries for web scraping?

The most commonly used Python libraries for web scraping are BeautifulSoup for parsing HTML and XML, and Selenium for interacting with JavaScript-heavy websites. Scrapy is another option for more complex projects.

Is web scraping legal?

The legality of web scraping depends on the website's terms of service and how you use the data. Always consult the website's terms and conditions and consider seeking legal advice to ensure compliance.

Further Resources

Once you've worked through the various aspects of web scraping, from setting up your environment to overcoming common challenges, these resources can help you keep learning.

Official Documentation. Always a good starting point. BeautifulSoup, Selenium, and other libraries have extensive documentation to help you understand their functionalities better.

Online Courses. Websites like Udemy, Coursera, and edX offer courses on web scraping and data analysis.

YouTube Tutorials. There are countless tutorials available that cover everything from the basics to advanced techniques.

Forums and Communities. Websites like StackOverflow and Reddit have active communities where you can ask questions and share knowledge.

Books. There are also several books available that focus on web scraping and data gathering techniques.

Wrapping Up

Now that you're equipped with the knowledge and tools needed for effective web scraping, it's time to put them into practice.

Start small, experiment, and don't hesitate to dive into more complex projects as you gain confidence.

Remember, the key to successful web scraping is not just gathering data, but turning it into actionable insights.