What Is Data Extraction?

Data extraction is the process of obtaining information from sources such as websites, databases, and documents. IDC forecasts that the global volume of data will reach 175 zettabytes by 2025, which makes efficient data extraction crucial. This is why many businesses automate their data extraction workflows with web scraping APIs, which allow them to collect, process, and analyze large amounts of data efficiently. Through web scraping, businesses can access and analyze the data they need to make more informed decisions in today’s data-driven world.

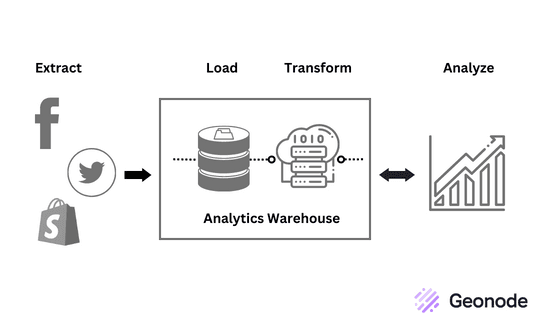

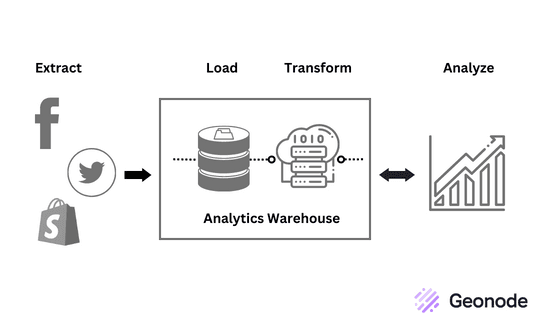

Data Extraction Using ETL

Extraction, Transformation, and Loading (ETL) is a process for integrating and managing large amounts of data from various sources. It is commonly used alongside data extraction and web scraping to gather, clean, and organize data for analysis.

The ETL process involves three main stages:

- Extraction: The first stage involves extracting or pulling data from various sources such as databases, files, or APIs. The extracted data can be structured, semi-structured, or unstructured.

- Transformation: The second stage involves transforming the extracted data into a format suitable for the target system, such as a data warehouse or a reporting tool. This stage involves data cleaning, filtering, aggregation, and enrichment to ensure consistency and accuracy. This stage also involves converting the data into an easily understood standard format.

- Loading: The final stage involves loading the transformed data into the target system for further analysis and processing. The data is loaded into a database or a data warehouse where other applications can easily query and analyse it.
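The three stages can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the inline CSV text, the `products` table, and the function names are hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file, database, or API.
RAW_CSV = """name,price,currency
 Widget ,19.99,usd
Gadget,,usd
Gizmo,5.50,USD
"""

def extract(raw_text):
    """Extraction: read the source rows as dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(rows):
    """Transformation: filter out incomplete rows and standardize the rest."""
    cleaned = []
    for row in rows:
        if not row["price"]:  # drop rows with a missing price
            continue
        cleaned.append((
            row["name"].strip(),      # remove stray whitespace
            float(row["price"]),      # convert text to a number
            row["currency"].upper(),  # use one currency-code style
        ))
    return cleaned

def load(rows, connection):
    """Loading: write the transformed rows into the target table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL, currency TEXT)"
    )
    connection.executemany("INSERT INTO products VALUES (?, ?, ?)", rows)
    connection.commit()

connection = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), connection)
loaded = connection.execute(
    "SELECT name, price, currency FROM products ORDER BY name"
).fetchall()
print(loaded)
```

The row with the missing price is dropped during transformation, so only two cleaned rows reach the target table.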

Data Extraction without ETL

Although data extraction can be performed outside of ETL, it has limitations and must be used accordingly. For example, if raw data is extracted without proper transformation and loading, it may be challenging to organize and analyze, and incompatible with newer programs and applications. Consequently, this data could have limited value except for archival purposes.

However, data extraction without ETL is still an excellent option for businesses needing to access data from multiple sources quickly. It is also a cost-effective solution, eliminating the need for expensive ETL software and hardware.

Additionally, it can be used to quickly and easily move data from one system to another without the need for complex transformations.

Depending on your needs and available resources, you can find several alternative data extraction techniques that do not rely on traditional ETL.

Change Data Capture (CDC)

Change Data Capture (CDC) is an extraction method that captures and stores the changes made to data in a source system for analysis. Unlike traditional ETL, which typically extracts entire datasets, CDC captures only the changes made since the last extraction. This makes it more efficient when only a small share of the data changes between runs.
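To make the idea concrete, here is a minimal sketch that derives change events by comparing two snapshots of a table. Production CDC tools commonly read the database's transaction log instead; snapshot comparison is swapped in here only because it fits in a few lines. The snapshot contents and the `capture_changes` name are hypothetical.

```python
def capture_changes(previous, current):
    """Compare two snapshots keyed by primary key and return change events."""
    changes = []
    for key, row in current.items():
        if key not in previous:
            changes.append(("insert", key, row))
        elif previous[key] != row:
            changes.append(("update", key, row))
    for key, row in previous.items():
        if key not in current:
            changes.append(("delete", key, row))
    return changes

# Hypothetical snapshots of a customers table taken at two points in time.
previous_snapshot = {
    1: {"name": "Ada", "plan": "free"},
    2: {"name": "Lin", "plan": "pro"},
}
current_snapshot = {
    1: {"name": "Ada", "plan": "pro"},
    3: {"name": "Sam", "plan": "free"},
}

changes = capture_changes(previous_snapshot, current_snapshot)
for operation, key, row in changes:
    print(operation, key, row)
```

Only the three changed rows are passed downstream, rather than the full table.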

Application Programming Interface (API)

APIs (Application Programming Interfaces) offer another way to extract data without ETL. An API is a set of protocols and tools for building software applications. With APIs, developers can programmatically extract data from a source system and make it available in their own applications.

Many popular applications, such as Google Maps and Twitter, provide APIs that can be used for data extraction.
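As a rough sketch of API-based extraction, the Python below separates fetching a JSON response from picking out the fields of interest. The URL, the response shape (a `results` list with `name` and `rating` keys), and the function names are assumptions made for illustration and do not describe any real provider's API.

```python
import json
import urllib.request

def fetch_json(url):
    """Send a GET request and decode the JSON body (requires network access)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))

def extract_places(payload):
    """Keep only the fields of interest from the assumed response shape."""
    return [
        {"name": item["name"], "rating": item.get("rating")}
        for item in payload.get("results", [])
    ]

# Hypothetical response body, standing in for
# fetch_json("https://api.example.com/places") so the sketch runs offline.
sample_payload = json.loads(
    '{"results": [{"name": "Cafe Uno", "rating": 4.5, "id": 7}, {"name": "Bar Dos"}]}'
)
places = extract_places(sample_payload)
print(places)
```

Real APIs usually also require authentication and paginate their results, both of which are left out of this sketch.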

Web Scraping

Web scraping is another method to extract data without ETL. This involves using automated scripts to extract data from web pages. While web scraping has some limitations, such as being vulnerable to changes in the web page structure, it can be a powerful tool for extracting data from websites.
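A minimal scraping sketch using only Python's standard library is shown below. The HTML snippet and the `price` class name are hypothetical; a real scraper would first download the page and would need to respect the site's terms of use.

```python
from html.parser import HTMLParser

class PriceParser(HTMLParser):
    """Collect the text of every element whose class attribute is 'price'."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        if dict(attrs).get("class") == "price":
            self._in_price = True

    def handle_endtag(self, tag):
        # Any closing tag ends the current price element in this simple sketch.
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())

# Hypothetical page fragment standing in for a downloaded product listing.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Widget</span> <span class="price">$19.99</span></li>
  <li><span class="name">Gizmo</span> <span class="price">$5.50</span></li>
</ul>
"""

parser = PriceParser()
parser.feed(SAMPLE_HTML)
print(parser.prices)
```

The parser depends on the `price` class name, so it would silently return nothing if the site renamed that class. This is the structural fragility described above.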

While ETL is the most commonly used method for data extraction, alternative methods can be just as effective. Change Data Capture, APIs, and web scraping are all viable options for extracting information from various sources. Evaluate which method best suits your particular use case, and stay up to date with the latest techniques and technologies.


Benefits of Data Extraction

Reduces human error

Data extraction automates the process of collecting data, minimizing the chances of errors that can occur when manually inputting data. As a result, it improves the accuracy and consistency of data.

Boosts productivity

Data extraction frees up time for employees to focus on other tasks, increasing their productivity. The process is also faster than manual data entry, enabling businesses to process more data in less time.

Enables better business decisions

Data extraction gives businesses access to a wealth of information, allowing them to make data-driven decisions. This leads to better customer service, improved products and services, and increased revenue.

Provides visibility

Data extraction provides visibility into business operations, enabling businesses to identify inefficiencies, growth opportunities, and improvement areas.

Simplifies sharing

Data extraction enables businesses to easily share data between departments and external stakeholders, improving collaboration and communication.

Cost-effective

Data extraction is a cost-effective solution for businesses as it eliminates the need for manual data entry and reduces the likelihood of errors, which can be expensive.

Time-saving

Data extraction automates the collecting and processing of data, saving businesses valuable time so they can focus on other important tasks.

Data Extraction: Real-World Use Cases

Data extraction has several real-world use cases across different industries. Here are some of the most common use cases for data extraction:

Reputation Monitoring

Companies need to monitor their online reputation to protect their brand. Data extraction helps track brand mentions, identify negative reviews, and gather customer feedback to improve the brand's image.

Market Research

Data extraction helps companies gather data on market trends, consumer behavior, and industry insights. This is used to make informed decisions about product development, marketing strategies, and business expansion.

Financial Data

Financial institutions use data extraction to collect and analyze data from various sources, including financial statements, news articles, and market reports, to make informed investment decisions and identify market trends.

Lead Generation

Data extraction helps generate leads by collecting contact information and other relevant data from social media profiles, company websites, and other online sources.

Content Aggregation

To create comprehensive and up-to-date news stories, news and media companies use data extraction to gather information from various sources, such as news articles, blog posts, and social media.

Sentiment Analysis

Businesses use data extraction to monitor customer sentiment by collecting and analyzing comments, reviews, and feedback. This helps companies improve their products and services and build better customer relationships.

Pricing Intelligence

Retailers use data extraction to collect information on competitor pricing, promotions, and discounts to adjust their own pricing strategies and gain a competitive edge.

Job Market Analysis

Recruitment agencies and job boards use data extraction to collect information on job postings, job descriptions, and required skills, helping job seekers find relevant job openings and employers identify potential candidates.

Social Media Analysis

Social media platforms use data extraction to analyze user behavior and preferences, identify influencers, and provide personalized recommendations to users.

Travel Industry

Travel agencies and booking platforms use data extraction to gather information on flights, hotels, and travel destinations, enabling them to offer more personalized travel packages to their customers.

Exploring Different Types of Extracted Data

During data extraction, different types of data can be extracted depending on the nature of the source and the purpose of the extraction. Let's explore some of them.

Unstructured Data

Unstructured data refers to data not organized in a predefined manner, such as emails, social media posts, or text documents. Unstructured data can be challenging to analyze because it does not have a consistent structure.

However, with the help of natural language processing techniques, unstructured data can be transformed into a structured format for more straightforward analysis and decision-making.
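As a simplified illustration of turning unstructured text into a structured record, the sketch below uses regular expressions as a lightweight stand-in for full natural language processing. The message text, field names, and patterns are hypothetical and would need tuning for real data.

```python
import re

# Hypothetical free-text customer message.
MESSAGE = (
    "Hi team, order #48213 arrived damaged. "
    "Please refund $59.90 to jane.doe@example.com by Friday."
)

def to_record(text):
    """Pull an order number, an amount, and an email address out of free text."""
    order = re.search(r"#(\d+)", text)
    amount = re.search(r"\$(\d+(?:\.\d{2})?)", text)
    email = re.search(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+", text)
    return {
        "order_id": order.group(1) if order else None,
        "amount": float(amount.group(1)) if amount else None,
        "email": email.group(0) if email else None,
    }

record = to_record(MESSAGE)
print(record)
```

Fields that cannot be found are set to `None`, so every record has the same keys and can be loaded into a table.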

Structured Data

Structured data, on the other hand, is organized in a predefined manner, such as in a spreadsheet or database. Structured data is easier to analyze because it has a consistent structure, which makes it easier to search, filter, and aggregate.

Structured data can be further categorized into different data types, such as customer, financial, and operational data.

Customer Data

Customer data includes information about customers, such as their demographics, purchase history, and preferences. Customer data is used to improve customer engagement, develop targeted marketing campaigns, and optimize the customer experience.

Examples of customer data sources include customer surveys, social media activity, and website analytics.

Financial Data

Financial data refers to information related to a company’s finances, such as revenue, expenses, and profit margins. Financial data is used to track financial performance, identify areas for cost savings, and make informed financial decisions.

Examples of financial data sources include accounting software, financial statements, and transactional data.

Operational Data

Operational data refers to information related to a business’s day-to-day operations, such as inventory levels, production output, and customer service metrics. Operational data is used to identify areas for process improvement, optimize resource allocation, and enhance overall efficiency.

Operational data sources include manufacturing equipment sensors, point-of-sale systems, and help desk software.

Data extraction can yield different types of data, including unstructured and structured data. Extracting and analyzing these data types can provide valuable insights for organizations to make informed decisions and drive business success.

Data Extraction Techniques

There are two main types of extraction methods in data warehousing: logical extraction and physical extraction.

Logical Extraction

Logical extraction is a method of data extraction that involves using logical rules and transformations to extract data from various sources and integrate it into a common format in a data warehouse. There are three primary methods in logical extraction: update notification, incremental extraction, and full extraction.

Update Notification

This method involves extracting only the data that has been updated in the source system since the last extraction. The source system notifies the data warehouse of the changes, and only the updated data is extracted. This is beneficial when real-time data integration is required, and only the most recent data is needed for analysis.
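One way to picture update notification is a source that calls back its subscribers whenever a row changes. The sketch below is a toy, in-process model under that assumption; the `SourceTable` and `Warehouse` classes are hypothetical, and real systems would typically use database triggers, webhooks, or a message queue.

```python
class SourceTable:
    """Toy source system that notifies subscribers about every change."""

    def __init__(self):
        self.rows = {}
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def upsert(self, key, row):
        self.rows[key] = row
        for callback in self._subscribers:
            callback(key, row)  # push the change to each subscriber

class Warehouse:
    """Toy target that stores only the rows it has been notified about."""

    def __init__(self):
        self.rows = {}
        self.notifications_received = 0

    def on_change(self, key, row):
        self.notifications_received += 1
        self.rows[key] = dict(row)

source = SourceTable()
source.upsert("sku-1", {"stock": 10})  # written before anyone subscribed

warehouse = Warehouse()
source.subscribe(warehouse.on_change)
source.upsert("sku-2", {"stock": 4})
source.upsert("sku-2", {"stock": 3})

print(warehouse.rows, warehouse.notifications_received)
```

The warehouse never sees `sku-1`, because that row changed before the subscription existed. This is why notification-based extraction is often paired with an initial full extraction.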

Incremental Extraction

This involves extracting only the data that has been added, updated, or deleted in the source system since the last extraction. The extraction is based on a timestamp or a flag that indicates when the data was last modified. This is often used when a significant volume of data is involved, and it is not practical to extract all the data every time.
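A timestamp-based incremental extraction can be sketched as follows. The `orders` table, its `updated_at` column, and the sample rows are hypothetical, and an in-memory SQLite database stands in for the source system.

```python
import sqlite3

# Hypothetical source table with a last-modified timestamp on each row.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL, updated_at TEXT)"
)
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 20.0, "2024-01-01T09:00:00"),
        (2, 35.5, "2024-01-02T14:30:00"),
        (3, 12.0, "2024-01-03T08:15:00"),
    ],
)

def extract_since(connection, last_extracted_at):
    """Return rows modified after the watermark, plus the new watermark."""
    rows = connection.execute(
        "SELECT id, total, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_extracted_at,),
    ).fetchall()
    # ISO 8601 timestamps sort correctly as plain strings.
    new_watermark = rows[-1][2] if rows else last_extracted_at
    return rows, new_watermark

rows, watermark = extract_since(source, "2024-01-01T23:59:59")
print(rows, watermark)
```

The returned watermark is saved and passed to the next run. A timestamp alone cannot reveal rows that were physically deleted, so deletions are usually tracked with a flag such as a soft-delete column.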

Full Extraction

Full extraction involves extracting all the data from the source system, regardless of whether it has changed since the last extraction. It is the go-to method when the data warehouse needs a complete copy of the source data and the volume of data is small or the source system is relatively stable.

Physical Extraction

Physical extraction, on the other hand, involves physically copying or moving data from the source system to the data warehouse. It has two primary methods: online extraction and offline extraction.

Online Extraction

Online extraction involves extracting data from the source system while it is still operating. This is useful when the data is needed in real-time, and the source system cannot be taken offline. However, the extraction process may impact the performance of the source system, which is an important consideration when choosing this method.

Offline Extraction

This involves extracting data from the source system when it is not in operation. It is often used when the data can be extracted during periods of low activity or when the data does not need to be extracted in real-time. The extraction process does not impact the performance of the source system, which is a great advantage when compared to online extraction.

Overall, these methods have their benefits and drawbacks. Choosing a method depends on the specific needs of your business and the nature of the data being extracted. Carefully selecting the appropriate method ensures that the data in your data warehouse is accurate, up-to-date, and available for analysis.

Data Extraction vs. Data Mining

Like data extraction, data mining is another crucial step in analyzing and utilizing large amounts of data. However, while both are used to extract valuable insights and knowledge from data, there are significant differences between the two.

Data Extraction

Data extraction is the process of extracting data from various sources and consolidating it into a central location, such as a data warehouse.

This involves identifying the relevant data sources, applying data cleansing and transformation techniques, and loading the data into a centralized database. The goal of data extraction is to create a complete and accurate copy of the data that can be easily accessed and analyzed.

Data Mining

On the other hand, data mining is the process of discovering patterns, trends, and insights in the data that has been extracted.

This process involves using statistical analysis and machine learning techniques to identify correlations, classifications, and other information to make informed business decisions.
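As one small example of the statistical side of data mining, the sketch below computes a Pearson correlation coefficient between two columns of already-extracted data. The discount and sales figures are invented for illustration, and a real analysis would use far more data and check for confounding factors.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)

# Hypothetical extracted data: discount offered (%) and units sold per week.
discount_percent = [0, 5, 10, 15, 20]
units_sold = [100, 110, 125, 130, 150]

r = pearson(discount_percent, units_sold)
print(round(r, 3))
```

A value close to 1 suggests that the two columns rise together, which is the kind of pattern data mining looks for in extracted data.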

Data mining aims to turn raw data into actionable knowledge and use it to improve business performance and gain a competitive advantage.

Simply put, data extraction is the process of getting data from various sources and putting it all in one place. In contrast, data mining analyses that data to find meaningful patterns and insights.

Both are essential steps in data analysis, and they are often used together to provide a comprehensive view of a business’s data.

By using these techniques, businesses can gain valuable insights into their customers, operations, and market trends, which can help them make better decisions and stay ahead of the competition.

Different Types of Data Extraction Tools

Data extraction tools are software applications that facilitate extracting data from various sources and consolidating it into a central location, such as a data warehouse. These tools are designed to automate the data extraction process and make it more efficient and accurate.

Different types of data extraction tools are available in the market, each with its own features and benefits. Below are three common types:

Batch Processing Tools

These tools are designed to extract data from large volumes of files or documents in a batch mode. Batch processing tools are helpful when you need to extract data from multiple files or similarly structured documents.

Examples of batch-processing tools include Talend Open Studio, Apache NiFi, and Alteryx.

Open Source Tools

These software applications are available for free and can be modified and customized by users. Open-source data extraction tools are popular among small and medium-sized businesses with limited budgets that cannot purchase expensive commercial software.

Examples of open-source data extraction tools include Apache Kafka, Apache Flume, and Pentaho Data Integration.

Cloud-based Tools

Cloud-based tools are hosted in the cloud and can be accessed via a web browser. You can use cloud-based data extraction tools when you need to extract data from remote sources or when you want to collaborate with other team members in different geographic locations.

Examples of cloud-based data extraction tools include AWS Glue, Azure Data Factory, and Google Cloud Dataflow.

Data extraction tools are integral to the data analysis process as they help businesses consolidate and analyze data from various sources. Remember that choosing the right data extraction tool can make a big difference in the efficiency and accuracy of your data analysis efforts.

Top Data Extraction Tools

Data extraction tools come in many different shapes and sizes. Whether you need to extract data from websites, documents, or social media platforms, there is a tool out there to help you automate the process and gain valuable insights from your data.

ScrapeStorm

ScrapeStorm is a web scraping tool that helps users extract data from various websites. It is designed to automate the process of web scraping and makes retrieving data easy for users without any coding experience. ScrapeStorm can extract data from tables, charts, and maps, as well as from PDFs and images. Its user-friendly interface and flexible customization options make it a popular choice for businesses of all sizes.

Altair Monarch

Altair Monarch is a data extraction tool that allows users to extract data from various sources, including PDFs, spreadsheets, and databases. It uses machine learning algorithms to automatically identify and extract data from unstructured documents, which helps reduce the manual effort required for data extraction. As a result, Altair Monarch is particularly useful for businesses that regularly extract data from large volumes of documents.

Klippa

An OCR-based data extraction tool, Klippa automates document processing. It can extract data from various documents such as invoices, receipts, and contracts. It uses machine learning algorithms to recognize and extract data from these documents and exports the extracted data to various formats such as Excel, JSON, and XML. Klippa is particularly useful for businesses that need to extract data from large volumes of documents in a short period.

NodeXL

NodeXL is a data analysis and visualization tool for social media networks. It allows users to extract data from various social media platforms, such as Twitter, Facebook, and LinkedIn, and visualize it in graphs and charts. NodeXL is particularly good for businesses that need to analyze their customers' and competitors' social media behavior. It is popular among many companies due to its user-friendly interface and advanced features.

What makes data extraction challenging?

Although it is a critical part of the data analysis process, data extraction comes with several challenges that can make it difficult for businesses to extract and use data effectively. Below are some of the biggest challenges in data extraction:

Data Formatting Issues

Data can come in various formats and structures, making it difficult to extract and standardize. It may be incomplete, inconsistent, or contain errors, requiring extensive data cleaning and transformation.
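Dates are a common example of this problem, since the same day can be written in several ways across sources. The sketch below tries a short list of known formats and converts each value to ISO 8601. The format list and sample values are assumptions; a real pipeline would extend them to match its own sources.

```python
from datetime import datetime

# Formats we assume the sources use; extend this tuple as new ones appear.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")

def normalize_date(value):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    text = value.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # try the next format
    return None

raw_dates = ["2023-01-27", "27/01/2023", "Jan 27, 2023", "sometime in January"]
normalized = [normalize_date(value) for value in raw_dates]
print(normalized)
```

Values that match no known format come back as `None` so they can be reviewed instead of being loaded with a wrong date. The `%b` month abbreviation is locale-dependent, so this sketch assumes an English or default locale.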

Data Security Concerns

Extracting data can pose security risks if it involves sensitive or confidential information. Businesses must take appropriate measures to ensure that the data is secure during extraction and transfer.

Technical Limitations

Extracting data can be challenging if the data is stored in legacy systems or spread across different data sources. It may require specialized technical skills or software tools to extract and integrate data from multiple sources.

Legal Compliance

Data extraction must comply with legal and regulatory requirements, such as data privacy laws like GDPR and CCPA. Failure to comply with these regulations can result in hefty fines and reputational damage.

Overcoming these challenges requires a strategic approach to data extraction, including advanced tools and techniques and a commitment to data quality and security. By addressing these challenges, businesses can unlock the full potential of their data and gain a competitive edge in the marketplace.

Best Practices for Data Extraction

Data extraction is a crucial step in data analysis, so it's essential to follow the best practices to ensure accuracy, quality, and success.

Identify the data sources

Begin by identifying all relevant data sources and understanding the structure of the data. This will help you avoid missing essential data and wasting resources on irrelevant data sources.

Clean and preprocess the data

To ensure accuracy and consistency, clean and preprocess the data as part of the extraction workflow, before it is analyzed. Cleaning and preprocessing can help identify and remove inconsistencies, errors, and outliers that may affect the quality of the extracted data.

Store and back up the data

Storing and backing up data is crucial to ensure that the data is accessible and secure. Data storage solutions like cloud-based services provide flexibility and scalability and can help protect data from loss or damage.

Secure the data

Implement robust security measures, such as data encryption, access controls, and regular security audits. Data security should be a top priority to protect sensitive data and prevent data breaches.
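As one small, complementary measure, sensitive fields can be pseudonymized before extracted data is shared or stored. The sketch below uses a keyed hash (HMAC-SHA256) from Python's standard library. Note that this is not encryption, since the original value cannot be recovered from the hash. The key, field names, and sample record are hypothetical.

```python
import hashlib
import hmac

# Hypothetical placeholder; a real key should come from a secrets manager.
SECRET_KEY = b"replace-with-a-key-from-a-secrets-manager"

def pseudonymize(value, key=SECRET_KEY):
    """Replace a sensitive string with a keyed SHA-256 digest."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_record(record, sensitive_fields=("email",)):
    """Return a copy of the record with sensitive fields pseudonymized."""
    return {
        field: pseudonymize(value) if field in sensitive_fields else value
        for field, value in record.items()
    }

record = {"customer_id": 42, "email": "jane.doe@example.com", "country": "PH"}
masked = mask_record(record)
print(masked)
```

The same input always produces the same digest under a given key, so masked records can still be joined or counted without exposing the original value.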

Conclusion

Data extraction can be challenging, but with the right tools and techniques, it can be easy and accessible to beginners. We have covered the basics of data extraction in this beginner's guide, from defining the term to discussing different methods and tools for data extraction.

The future of data extraction is exciting, as advances in machine learning and artificial intelligence enable more efficient and accurate data extraction from various sources. As the amount of available data continues to grow, questions will arise about which types of big data we can extract value from, and how. As a result, mastering data extraction will become an increasingly important skill for businesses and individuals alike.

It is crucial to approach data extraction with a clear understanding of the goals and needs of your project, as well as a willingness to experiment with different tools and methods to find what works best. By following the tips and techniques outlined in this guide, you can be well on your way to successfully extracting and utilizing valuable data.

Frequently Asked Questions (FAQs)

What is the meaning of data extraction?

Data extraction is the process of retrieving data from various sources, such as databases, websites, or applications, and transforming it into a usable format for analysis. The extracted data can then be used to generate insights and support decision-making.

What is an example of data extraction?

An example of data extraction is scraping product information from an e-commerce website. The extraction process involves identifying the relevant data fields, such as product name, price, and description, and retrieving the data using web scraping tools or APIs.

What is data extraction used for?

Data extraction is used to retrieve data from multiple sources to support various business functions, such as data analysis, reporting, and decision-making. The extracted data is then used to generate insights, develop predictive models, and improve business operations and strategies.

What are data extraction techniques?

There are several data extraction techniques, such as web scraping, API integration, and database querying. Web scraping involves extracting data from websites using automated tools, while API integration allows data to be retrieved from web-based applications. Database querying is the process of retrieving data from structured databases using SQL queries.
