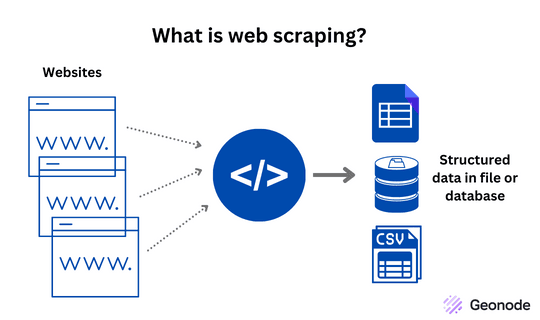

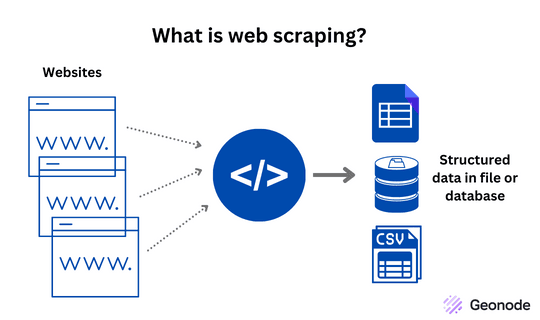

What is Web Scraping?

Web scraping, also known as web data extraction or web harvesting, is the process of extracting data from websites using automated software. It can be done either manually, like copying and pasting data by hand, or automatically, like extracting information through a web scraper.

Doing it by hand takes time and effort, especially if you want to scrape large volumes of data. This is why most users prefer to use web scrapers to do the job for them. This technology allows individuals and organizations to gather information from multiple sources quickly and efficiently.

What kinds of data can you scrape from the web?

There are many different types of data you can scrape from the web, including:

Text data. This includes anything from headlines, articles, and product descriptions to comments, reviews, and more.

Images and videos. You can scrape photos and videos from websites, including product images, infographics, and more.

Structured data. This type of data is organized in a specific format, such as tables, spreadsheets, and databases. Examples of structured data include product listings, real estate listings, and financial data.

Unstructured data. This type of data is not organized in a specific format and can include things like social media posts, comments, and more.

Metadata. This type of data provides information about other types of data, such as the date a webpage was last updated, the author of a post, and more.

By understanding the different types of data available for web scraping, you can determine which data is most useful for your specific needs, and develop a strategy for scraping that data effectively.

Is web scraping legal?

Web scraping is legal, although websites aren’t exactly keen on the idea of users scraping their sites. If they detect that you’re using a web scraper or any other automation tool on their site, they would immediately ban your account and blacklist your IP address.

If you want to learn about the legality of web scraping, you can read a thorough article about it here: Legality and Myths of Web Scraping.

How do web scrapers work?

At its core, web scraping is a process that involves sending a request to a website's server, downloading the HTML code, and then extracting the desired information. The request is usually made using a web scraper tool or a programmatic approach, such as using a programming language like Python.

Once the HTML code is received, the web scraper tool or program parses the code to find the specific data that you want to extract. This is often done using a technique called "data extraction," which uses rules or patterns to extract information from the HTML code.

The extracted data is then stored in a structured format, such as a spreadsheet or database, for further analysis or use.

What are the different types of web scrapers?

There are several different types of web scrapers, each with its own set of features and capabilities. In this guide, we'll explore the different types of web scrapers, so you can choose the one that's right for your needs.

Simple Screen Scrapers

Simple screen scrapers are the most basic type of web scrapers. They extract data from websites by copying and pasting information from the website into a local file. They're typically used for small-scale projects and don't require much technical knowledge.

Complex Screen Scrapers

Complex screen scrapers are more advanced than simple screen scrapers and are used for larger-scale projects. They extract data from websites by using scripts to automate the scraping process, making it faster and more efficient. Complex screen scrapers often use APIs to access data, allowing them to extract larger amounts of information.

Headless Browsers

Headless browsers are web scrapers that don't use a graphical user interface. They operate in the background and extract data from websites without displaying the website in a browser window.

Headless browsers are useful for web scraping because they're faster and more efficient than traditional web browsers, and they allow you to extract data from websites without having to load the entire page.

Web Crawlers

Web crawlers are a type of web scraper that's designed to traverse large websites, extracting information from multiple pages. They work by starting on a specific page and then following links to other pages, extracting information as they go.

Web crawlers are used for a wide range of tasks, from gathering information for search engines to monitoring websites for changes.

Self-built Web Scrapers

Self-built web scrapers are web scraping tools that individuals or organizations create themselves using programming languages like Python, Ruby, or JavaScript. This approach allows them to tailor their web scraper specifically to their needs, such as scraping a particular website, data type, or frequency.

Self-built web scrapers typically require more technical expertise and time compared to using an off-the-shelf solution. However, the advantage of this approach is that the resulting web scraper can be highly customized, providing greater control over the scraping process and resulting data.

Browser Extension Web Scrapers

A browser extension web scraper is a software tool that integrates directly into your web browser and automates the process of scraping data from websites. This type of scraper is designed to work seamlessly within your browser, giving you easy access to the data you need without having to switch between different programs or applications.

With a browser extension web scraper, you can easily select the data you want to scrape, and set the parameters for your scrape. The scraper will then do the rest. It will extract the data you need and present it in a clean and organized format, saving you time and effort compared to manual scraping.

User Interface Web Scrapers

A UI web scraper works by presenting the user with an interface where they can select the data they want to scrape. The user can interact with the interface, select the data they want to extract, and the scraper will do the rest. It can automatically crawl through the website, extract the data, and save it in a structured format like CSV, Excel, or a database.

One of the key benefits of using a UI web scraper is that it eliminates the need for coding knowledge. You can simply point and click to select the data you want to extract. This makes it an excellent option for those who want to perform simple scraping tasks without the need for technical expertise.

Another advantage of UI web scrapers is their ease of use. They are designed to be user-friendly, and you can get started quickly without having to learn new software. Simply follow the steps, and you'll have your data in no time.

Cloud Web Scrapers

A cloud web scraper is a tool that allows you to run web scraping tasks on a cloud-based infrastructure. Instead of using your computer to perform the scraping, you can utilize the processing power of remote servers in the cloud. This means you can scrape websites at scale and handle larger volumes of data without worrying about your local hardware limitations.

One of the main advantages of using cloud web scrapers is the ability to access a virtually unlimited amount of resources, including storage, processing power, and bandwidth. This means you can scrape even the largest and most complex websites without worrying about performance issues.

Another advantage is the ability to run web scraping tasks 24/7 without interruption, even if your computer is turned off. This is because cloud web scrapers can be configured to run on remote servers that are always powered on.

Local Web Scrapers

Local web scrapers are a type of web scraping tool that runs on your computer, rather than on a remote server in the cloud. It is a good choice for small-scale web scraping projects, where you only need to extract a limited amount of data. They are also a good option if you have concerns about data privacy or if you need to scrape websites that are not accessible from the public internet.

One of the main advantages of using a local web scraper is that it is simple to set up and use. You don't need to have any specialized knowledge or technical skills to get started, and you can start scraping data right away.

Another advantage is that you have full control over the scraping process. You can configure the scraper to extract data in the format you need, and you can also set the frequency and schedule of the scraping tasks.

What are popular programming languages for web scraping?

Many programming languages can be used for web scraping, each with its strengths and weaknesses. We'll be exploring some of the most popular programming languages for web scraping.

Python: Python is one of the most popular programming languages for web scraping. It has a large and active community, which has developed many libraries and tools specifically for web scraping. BeautifulSoup and Scrapy are two of the most widely used Python libraries for web scraping.

Ruby: Ruby is another popular programming language for web scraping. It has a large and active community, and there are many libraries and tools available for web scraping. Nokogiri is a popular Ruby library for web scraping.

JavaScript: JavaScript is a popular language for web scraping because it is used to power many websites. With the rise of Node.js, JavaScript has become a popular choice for server-side web scraping. Puppeteer is a popular JavaScript library for web scraping.

PHP: PHP is a popular programming language for web scraping because it is widely used for building websites. There are many libraries and tools available for web scraping with PHP. Simple HTML DOM is a popular PHP library for web scraping.

Java: Java is a popular programming language for web scraping because of its efficiency and scalability. There are many libraries and tools available for web scraping with Java, including JSoup and HtmlUnit.

What are web scrapers used for?

Web scraping is used for a variety of purposes, including:

Data Collection: Can be used to collect large amounts of data from websites and turn it into structured information that can be used for analysis and research. This data can be used to make informed business decisions, track industry trends, and more.

Brand Monitoring: Can be used to monitor customer opinion by collecting publicly available customer reviews. This information can help you maintain and even boost your online reputation.

Real Estate Listings: Can be used to gather information about various real estate data, including property price, property type, property location, and many more.

Price Monitoring: Can be used to monitor prices on e-commerce websites and track competitor pricing. This information can be used to adjust your pricing strategy and stay competitive.

Lead Generation: Can be used to gather information about potential customers and build targeted lists for sales and marketing purposes.

Social Media Monitoring: Can be used to monitor social media for mentions of your brand, competitors, or industry, and gather valuable insights and data about your audience.

Price Intelligence: Can be used to collect and compare global product pricing information and gather it all into one place.

News and Content Monitoring: Can be used to gather information from multiple sources, including news sites, and compile it into one place, making it easier to access and analyze.

Job Listings: Can be used to gather job listings from various websites and compile them into one place, making it easier to find job opportunities.

Travel Aggregation: Can be used to collect information from many hotel and flight company websites and compile it into one piece.

What is a web scraping tool?

Web scraping tools are software programs designed to automatically extract data from websites. They are used to gather information for various purposes, including data analysis, research, price monitoring, and more. We'll introduce you to the world of web scraping tools and explain how they can help you achieve your goals.

How do web scraping tools work?

Web scraping tools work by sending a request to a website and then extracting the data from the HTML code that the website returns. This data can then be stored and analyzed to gain insights or used for other purposes.

What are the benefits of using a web scraping tool?

The main benefits of using a web scraping tool include saving time, reducing manual labor, and gathering large amounts of data from multiple sources. With a web scraping tool, you can quickly and easily gather the data you need, freeing up time for other important tasks.

What are the different types of web scraping tools?

There are several different types of web scraping tools, including cloud-based scrapers, local scrapers, and browser extensions. Each type of tool has its unique features and benefits, and the right tool for you will depend on your specific needs and goals.

How to choose a web scraping tool?

When choosing a web scraping tool, consider factors such as ease of use, speed, and cost. It's also important to consider the type of data you need to scrape, as some tools may not be able to handle complex or dynamic websites.

What tools can you use to scrape the web?

Here are some of the most popular web scraping tools available:

Beautiful Soup: Beautiful Soup is a Python library that makes it easy to scrape data from HTML and XML files. It's one of the most commonly used web scraping tools and is great for handling complex and poorly formatted websites.

Scrapy: Scrapy is another Python library for web scraping. It's more powerful than Beautiful Soup, but also more complex. If you have experience with Python, Scrapy is a great option for large-scale web scraping projects.

Octoparse: Octoparse scraper is a Windows-based tool for web scraping that requires no coding. It's easy to use and provides a visual interface for building web scraping workflows.

Parsehub: Parsehub is a web-based tool for web scraping that requires no coding. It's similar to Octoparse but works on any operating system with a web browser.

WebHarvy: WebHarvy is another visual web scraping tool that doesn't require any coding. It's easy to use and supports both Windows and Mac.

Scraper API: Geonode’s flexible web scraper is designed to make web scraping easy and efficient for developers, whether they are a beginner or a veteran

These are just a few of the many web scraping tools available. When choosing a tool, it's important to consider your technical skill level, the size of the projects you'll be working on, and your specific needs. No matter which tool you choose, you're sure to find web scraping to be a valuable and efficient way to collect data from the web.

What is the web scraping process?

The web scraping process can be broken down into several steps:

- Determine the target website and data to be scraped: The first step is to identify the website from which you want to extract data and the specific data you want to collect.

- Inspect the website: Before you start scraping, it's important to inspect the website to understand how it is structured and what data is available. This can help you identify the right tools and techniques to use for the scraping process.

- Choose a scraping tool or write code: There are many tools available for web scraping, including both open-source and paid tools. Some popular choices include BeautifulSoup, Scrapy, and Selenium. Alternatively, you can write your code to scrape the website using a programming language like Python or Java.

- Write the code or configure the scraping tool: Once you have chosen a tool or decided to write your code, you'll need to configure it to extract the data you want from the website. This may involve writing code to navigate the website and extract specific elements, or configuring the scraping tool to identify the data you want.

- Run the scraper: Once your code or scraping tool is configured, you can run the scraper to extract the data from the website. Depending on the size of the website and the amount of data you want to collect, this process may take several minutes or even hours.

- Clean and analyze the data: The final step is to clean and analyze the data you have collected. This may involve removing irrelevant data, formatting the data into a usable format, and performing any necessary analysis to gain insights from the data.

By following these steps, you can effectively scrape data from websites and use it to gain insights or support your business decisions.

Final Thoughts

Web scrapers are useful tools that help you get the data you need quickly and efficiently. There are many different types of web scrapers and you can use them to get various kinds of data.

With the correct tools, you can optimize the gathering process and save yourself a lot of time and effort. When choosing a tool, it's important to consider your technical skill level, the size of the projects you'll be working on, and your specific needs.

If you want to try out a great web scraping tool, you can check out Geonode’s Scraper API. You can try it out for free and see if it works for you and your needs.